Chapter 7 Checking Convergence

It is important to ensure that we have included enough simulations in the MSE for the results to be stable.

The Converge function can be used to confirm that the number of simulations is sufficient and that the MSE model has converged. By converged we mean that the relative positions of the Management Procedures are stable with respect to the different performance metrics and that the performance statistics have stabilized, i.e., they would not change significantly if the model were run with more simulations.

The purpose of the Converge function is to answer the question: have I run enough simulations?

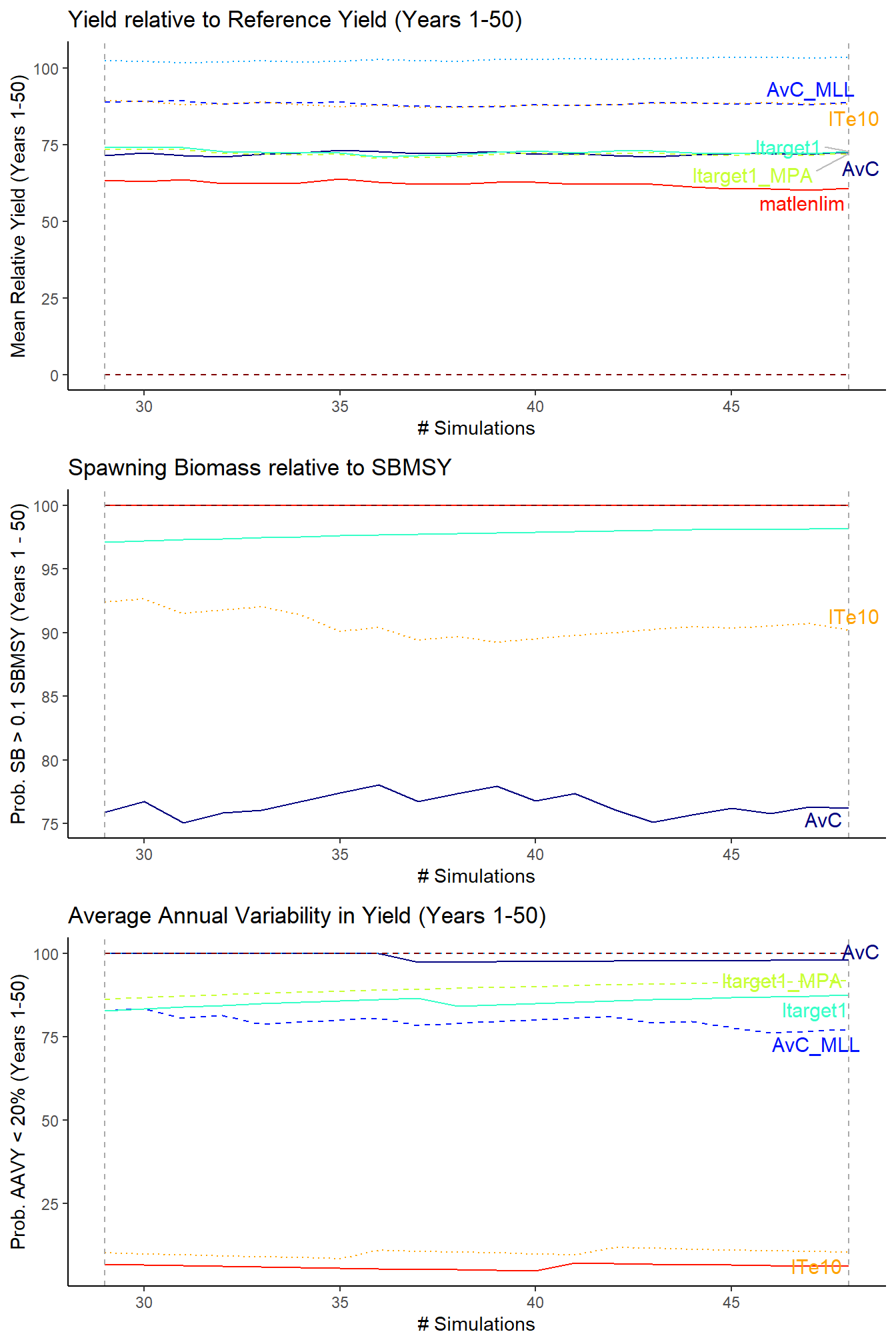

By default the Converge function includes three commonly used performance metrics, and plots the performance statistics against the number of simulations. The convergence diagnostics are:

- Does the order of the MPs change as more simulations are added? By default this is calculated over the last 20 simulations.

- Is the average difference in the performance statistic over the last 20 simulations greater than the convergence threshold (reported as 0.5 in the output shown in this chapter)?

The number of simulations used to calculate the convergence statistics, the minimum change threshold, and the performance metrics to use can all be specified as arguments to the function. See the help documentation for more details (?Converge).
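The two diagnostics described above can be illustrated with a minimal base R sketch. This is not the code used inside Converge: the data are simulated, the MP names are hypothetical, and the function and argument names (`order_is_stable`, `not_converged`, `ref_it`, `thresh`) are chosen here for illustration only. In particular, the exact way the package measures the "average difference" is an assumption; the sketch uses the mean absolute difference between the final value of the running statistic and the preceding `ref_it` values.

```r
# Toy stand-in for MSE output: one performance value per simulation (rows)
# and per Management Procedure (columns). All values are simulated.
set.seed(1)
n_sim <- 200
mp_means <- c(MP_A = 50, MP_B = 55, MP_C = 70)  # hypothetical MPs
perf <- sapply(mp_means, function(m) rnorm(n_sim, mean = m, sd = 10))

# Performance statistic after 1, 2, ..., n_sim simulations (running mean per MP)
running_stat <- apply(perf, 2, function(x) cumsum(x) / seq_along(x))

# Diagnostic 1: does each MP keep the same rank over the last `ref_it` rows?
order_is_stable <- function(stat, ref_it = 20) {
  last <- tail(stat, ref_it)
  ranks <- apply(last, 1, rank)  # one row per MP, one column per simulation
  apply(ranks, 1, function(r) length(unique(r)) == 1)
}

# Diagnostic 2 (assumed definition): is the mean absolute difference between
# the final value and the preceding `ref_it` values larger than `thresh`?
not_converged <- function(stat, ref_it = 20, thresh = 0.5) {
  n <- nrow(stat)
  stopifnot(n > ref_it)
  window <- stat[(n - ref_it):(n - 1), , drop = FALSE]
  final <- stat[n, ]
  mean_diff <- colMeans(abs(sweep(window, 2, final)))
  mean_diff > thresh
}

# Compare the diagnostics using only the first 48 simulations and all 200
print(order_is_stable(running_stat[1:48, ]))
print(not_converged(running_stat[1:48, ]))
print(order_is_stable(running_stat))
print(not_converged(running_stat))
```

Both functions return one logical value per MP, which mirrors the way the function's output lists the MPs that fail each check for each performance metric.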

    ## Checking if order of MPs is changing in last 20 iterations
    ## Checking average difference in PM over last 20 iterations is > 0.5
    ## Plotting MPs 1 - 8
    ##
    ## Yield relative to Reference Yield (Years 1-50)
    ## Order over last 20 iterations is not consistent for:
    ##  AvC
    ## Order over last 20 iterations is not consistent for:
    ##  AvC_MLL
    ## Order over last 20 iterations is not consistent for:
    ##  Itarget1
    ## Order over last 20 iterations is not consistent for:
    ##  Itarget1_MPA
    ## Order over last 20 iterations is not consistent for:
    ##  ITe10
    ## Mean difference over last 20 iterations is > 0.5 for:
    ##  AvC
    ## Mean difference over last 20 iterations is > 0.5 for:
    ##  Itarget1
    ## Mean difference over last 20 iterations is > 0.5 for:
    ##  matlenlim
    ## Mean difference over last 20 iterations is > 0.5 for:
    ##  Itarget1_MPA
    ##
    ## Spawning Biomass relative to SBMSY
    ## Mean difference over last 20 iterations is > 0.5 for:
    ##  AvC
    ## Mean difference over last 20 iterations is > 0.5 for:
    ##  ITe10
    ##
    ## Average Annual Variability in Yield (Years 1-50)
    ## Order over last 20 iterations is not consistent for:
    ##  AvC_MLL
    ## Order over last 20 iterations is not consistent for:
    ##  Itarget1
    ## Mean difference over last 20 iterations is > 0.5 for:
    ##  AvC
    ## Mean difference over last 20 iterations is > 0.5 for:
    ##  Itarget1
    ## Mean difference over last 20 iterations is > 0.5 for:
    ##  ITe10
    ## Mean difference over last 20 iterations is > 0.5 for:
    ##  AvC_MLL
    ## Mean difference over last 20 iterations is > 0.5 for:
    ##  Itarget1_MPA

    ##                    Yield P10 AAVY
    ## MPs Not Converging     4   2    5
    ## Unstable MPs           5   0    2

Have we run enough simulations?

The convergence plot reveals that neither the order of the MPs nor the performance statistics are stable. This suggests that 48 simulations are not enough to produce reliable results.
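A rough way to see why adding simulations helps: if the simulations are treated as independent draws, the Monte Carlo standard error of an averaged performance statistic shrinks in proportion to one over the square root of the number of simulations. The sketch below works through that arithmetic for 48 and 200 simulations. The between-simulation standard deviation is a made-up value used only for illustration, and `mc_se` is a helper defined here, not part of any package.

```r
# Monte Carlo standard error of a mean over n independent simulations
mc_se <- function(sd, n) sd / sqrt(n)

sd_between_sims <- 10  # hypothetical value, for illustration only

se_48 <- mc_se(sd_between_sims, 48)
se_200 <- mc_se(sd_between_sims, 200)

# The ratio depends only on the two sample sizes: sqrt(48 / 200)
se_ratio <- se_200 / se_48
print(se_ratio)
```

Under this assumption, moving from 48 to 200 simulations roughly halves the sampling noise in each averaged statistic, whatever the underlying standard deviation is.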

Let’s increase the number of simulations and try again:

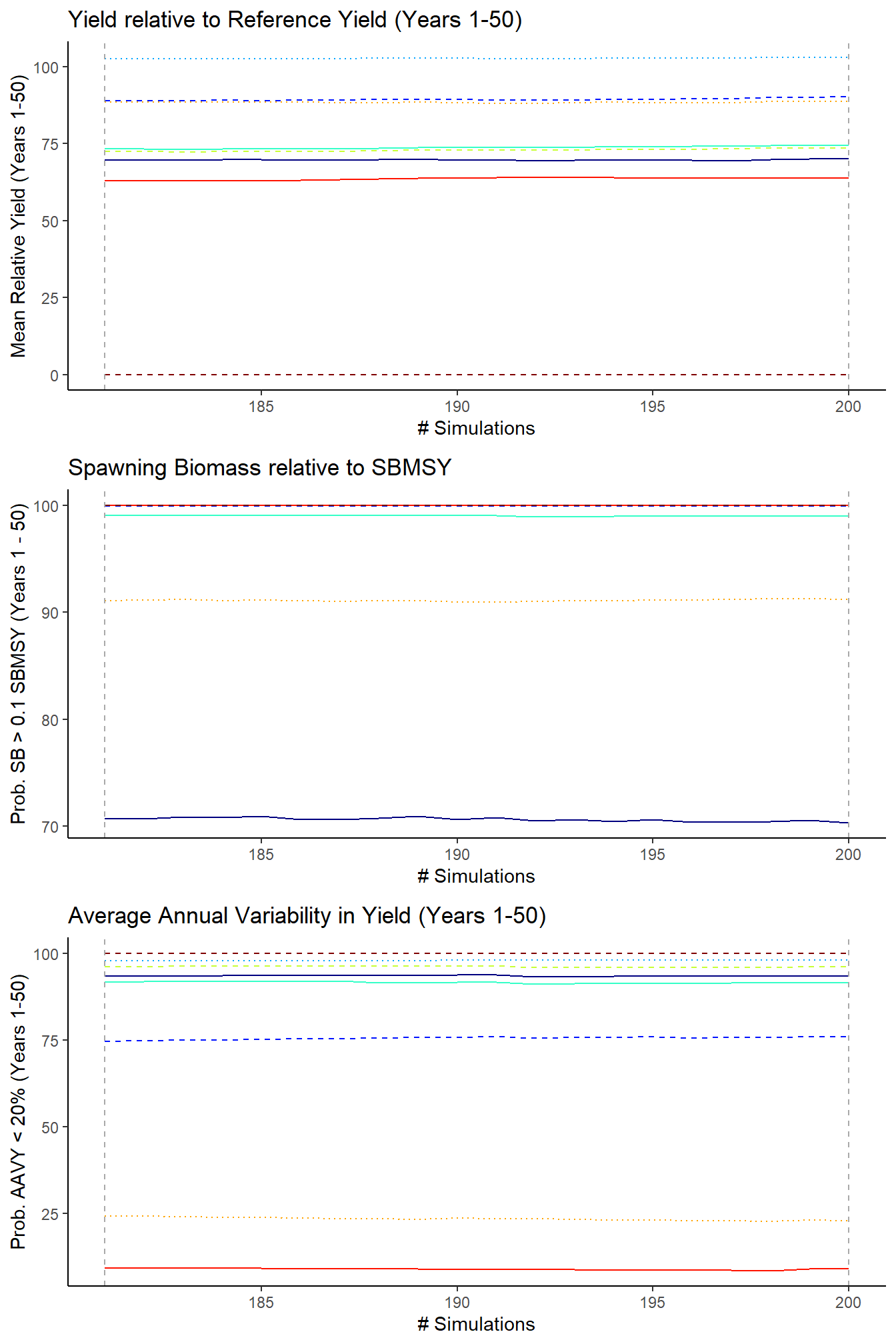

Is 200 simulations enough?

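    ## Checking if order of MPs is changing in last 20 iterations
    ## Checking average difference in PM over last 20 iterations is > 0.5
    ## Plotting MPs 1 - 8

    ##                    Yield P10 AAVY
    ## MPs Not Converging     0   0    0
    ## Unstable MPs           0   0    0

With 200 simulations, no MPs are flagged as unstable or not converging for any of the three performance metrics, which suggests that the results are now stable.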